We must expand human rights to cover neurotechnology

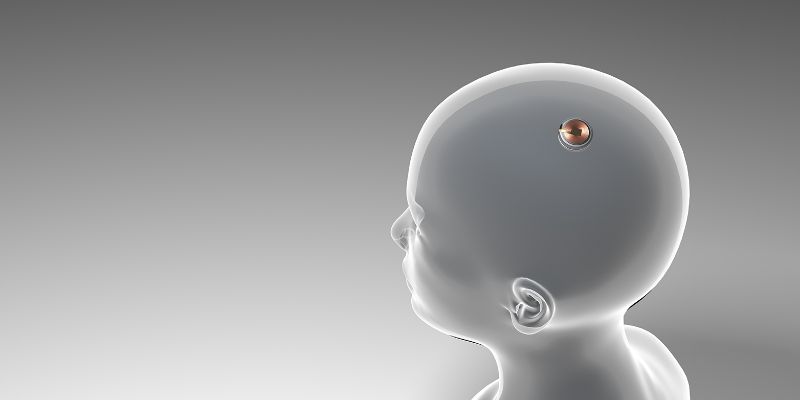

It is no longer a utopian idea to establish a direct connection between the human brain and a computer in order to record and influence brain activity. Scientists have been working on the development of such brain-computer interfaces for years. The recent pompous announcements by Elon Musk's company Neuralink have probably received the most media attention. But countless other research projects around the world are developing technological solutions to better understand the structure and function of the human brain and to influence brain processes in order to treat neurological and mental disorders, such as Parkinson’s disease, schizophrenia and depression. The end goal is unlocking the enigma of the human brain, which is one of the grandest scientific challenges of our time.

The diagnostic, assistive and therapeutic potential of brain-computer interfaces and neurostimulation techniques, and the hopes placed in them by people in need, are enormous. Since nearly one in four people worldwide suffers from a neurological or psychiatric disorder, such neurotechnologies hold promise for alleviating human suffering. However, their potential for misuse is just as great, and this raises qualitatively different and unprecedented ethical issues [1,2]. The corresponding challenge for science and policy is therefore to ensure that such much-needed innovation is not misused but is responsibly aligned with ethical and societal values in a manner that promotes human wellbeing.

Accessing a person’s brain activity

Is it legitimate to access or interfere with a person’s brain activity, and if so, under what conditions? When we as ethicists deal with new technologies like these, we find ourselves walking a delicate tightrope. On one side is the need to accelerate technological innovation and clinical translation for the benefit of patients. On the other is the need to ensure safety by preventing unintended adverse effects. This is not easy. With new technologies we are always stuck in a fundamental quandary. The social consequences of a novel technology cannot be predicted while the technology is still in its infancy. Yet by the time undesirable consequences are discovered, the technology is often so entrenched in society that controlling it is extremely difficult.

Social media illustrate this quandary. When the first social media platforms were established in the early 2000s, their mid- to long-term ethical and societal implications were unknown. More than fifteen years later, we have extensive information about the undesirable consequences these platforms can cause: the spread of fake news, the emergence of filter bubbles, political polarization, and the risk of online manipulation [3]. However, these technologies are now so entrenched in our societies that they elude any attempt to realign, modify, regulate, and control them.

Today, we face this very same dilemma with several emerging technologies, including brain-computer interfaces and other neurotechnologies. These technologies are no longer confined to the medical domain, where they must comply with strict regulations and ethical guidelines. They have already spilled over into a number of other fields, such as the consumer market, the communication and transportation industries, and even law enforcement and the military sector. Outside the lab and the clinic, these technologies often operate in a regulatory no man's land.

When it comes to neurotechnology, we cannot afford this risk. The brain is not just another source of information that irrigates the digital infosphere. It is the organ that builds and enables our mind. All our cognitive abilities, including our perception, memories, imagination, emotions, decisions and behaviour, are the result of the activity of neurons connected in brain circuits.

Impact on personal identity

Neurotechnology can read and write brain activity. It therefore promises that it could, at least in principle, one day decode and modify the content of our mind. What’s more, brain activity and the mental life it generates are the critical substrate of personal identity and of moral and legal responsibility. The reading and manipulation of neural activity through neurotechnologies mediated by artificial intelligence (AI) could therefore have unprecedented repercussions on people’s personal identity. It could also introduce an element of obfuscation into the attribution of moral or even legal responsibility.

To avoid these risks, anticipatory governance is needed. We cannot simply react to neurotechnology once potentially harmful misuses of these technologies have reached the public domain. Instead, we have a moral obligation to be proactive and to align the development of these technologies with ethical principles and democratically agreed societal goals.

From neuroethics to neurorights

A comprehensive framework is needed to address the diversity and complexity of both neurotechnologies and the ethical, legal and social implications they raise. Together with other scholars such as neuroscientist Rafael Yuste, I have argued that ethics is paramount. However, the foundation of this governance framework should be laid at the level of fundamental human rights. After all, mental processes are the quintessence of what makes us human.

Existing human rights may need to be expanded in scope and definition to adequately protect the human brain and mind. Legal scholar Roberto Andorno from the University of Zurich and I labelled these emerging human rights “neurorights” [4,5]. We proposed four neurorights:

- The right to cognitive liberty protects the right of individuals to make free and competent decisions regarding their use of neurotechnology. It guarantees individuals the freedom to monitor and modulate their brains, or to refrain from doing so. In other words, it is a right to mental self-determination.

- The right to mental privacy protects individuals against unconsented intrusion by third parties into their brain data, as well as against the unauthorized collection of those data. This right allows people to determine for themselves when, how, and to what extent others can access their neural information. Mental privacy is particularly relevant because consumer neurotechnology applications are making brain data increasingly available. As a result, these data are exposed to the same privacy and security risks as any other data.

- The right to mental integrity is already recognized in international law, for example in the Charter of Fundamental Rights of the European Union. It could be broadened to protect people against unconsented and harmful applications of neurotechnology. It could also ensure that people with physical and/or mental disabilities have the right to access and use safe and effective neurotechnologies.

- Finally, the right to psychological continuity aims to protect people’s personal identity and the continuity of their mental life from unconsented alteration by third parties.

Neurorights are already a reality in international policy

Neurorights are not just an abstract academic idea but a principle that has already entered national and international politics. In a constitutional reform bill, the Chilean parliament defined “mental integrity” as a fundamental human right. It also passed a law that protects brain data and applies existing medical ethics to the use of neurotechnologies. Furthermore, the Spanish Secretary of State for AI has recently published a Charter of Digital Rights that incorporates neurorights as part of citizens' rights for the new digital era. The Italian Data Protection Authority devoted the 2021 Privacy Day to the topic of neurorights. The new French law on bioethics endorses the right to mental integrity in that it permits the prohibition of harmful modifications of brain activity. Cognitive liberty and mental privacy are also mentioned in the OECD Recommendation on Responsible Innovation in Neurotechnology [6]. Last but certainly not least, the Council of Europe has launched a five-year Strategic Action Plan focused on human rights and new biomedical technologies, including neurotechnology. The aim of this programme is to assess whether the existing human rights framework sufficiently addresses the ethical and legal issues raised by neurotechnology, or whether new instruments need to be developed.

To realize the great potential of neurotechnologies while avoiding their misuse, we must address the ethical and legal issues they raise and regulate them for the benefit of all people.